Log Exports to Azure

Client and Audit log exports to Azure Blob Storage

Prerequisities

To use this feature, you'll need administrative access to an Azure Tenant (in order to create a Blob Container for receiving the log exports from Cloudsmith).

If you already have one type of export set up with us and don't require updating your role/permissions, please skip toExisting Setup.

New Setup

Next, you'll need to follow these steps:

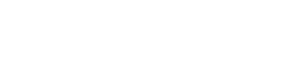

1. In the Storage Account you would like to use, create a Blob Container for the logs, such as cloudsmith-acmecorp-logs where you can replace acmecorp with your own organization name.

2. If using an existing container, pick a folder name for the Cloudsmith logs to go into, such as cloudsmith-logs. This is configured in the next step and configured on our side in tandem.

3. If planning to exports multiple types of logs (e.g. Client and Audit logs), let us know and we will make sure to use separate folders names inside of your Cloudsmith log export container (Note them down for later):

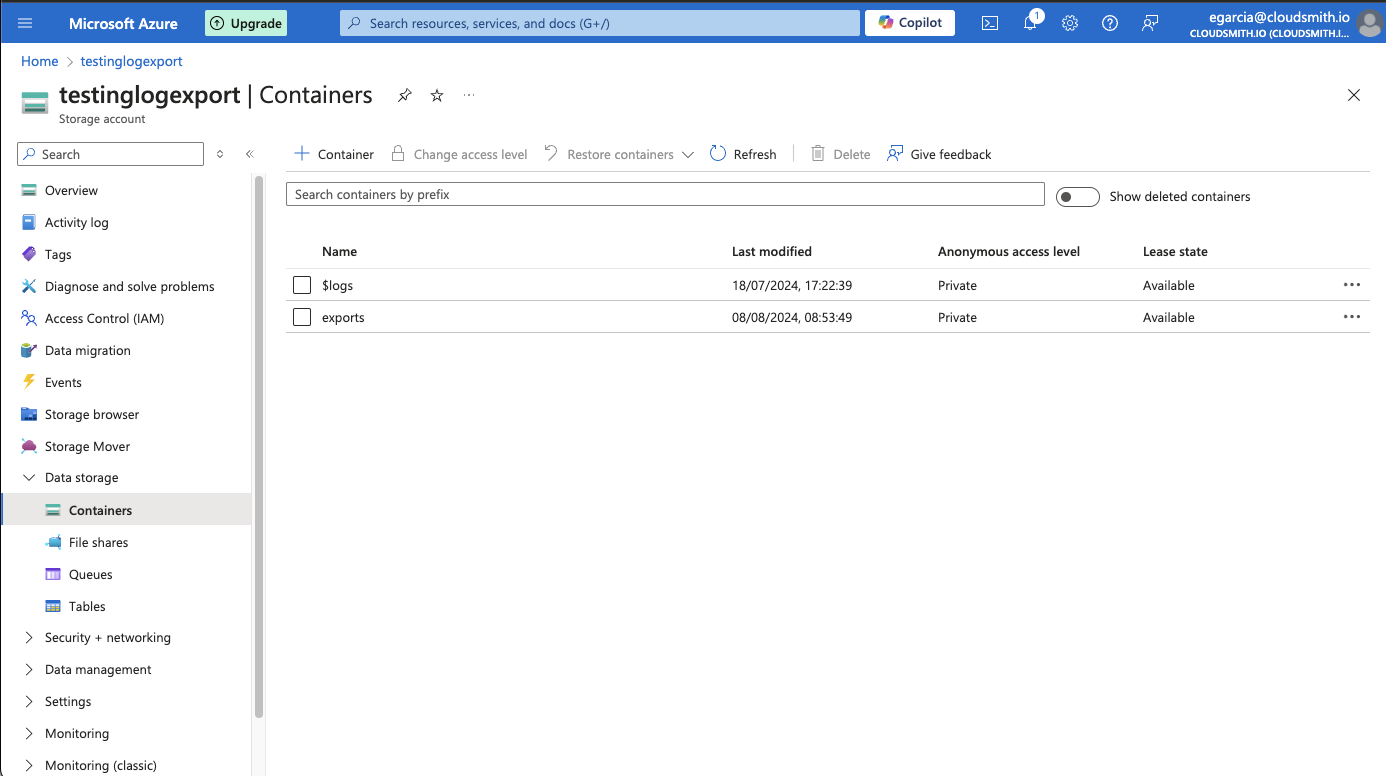

4. Create a Managed Identity and assign a Role to it that only has write access to the Blob Container you created in the previous step. Make note of the Managed Identity Client ID as we will need it for our next step.

5. Contact us to tell us your:

One container to rule them all!We recommend setting up one container with folders for each log type and use the same Azure Tenant, if you require each log type to have a different Azure Tenant, please let us know.

- Azure Tenant ID

- Azure Managed Entity Client ID

- Azure Storage Account Name

- Azure Blob Storage Container Name

- Azure Blob Storage Folder Name

- The log format that you want to export:

- JSON (Stream) (RECOMMENDED)

- JSON (Stream+TimeStamp)

- CSV

- Apache Style

- The log type that you want to export:

- Audit Logs

- Client Logs

- Prefix (folder names) for each type of log export

- Export Options:

- Entire org (RECOMMENDED)

- Selected repositories only

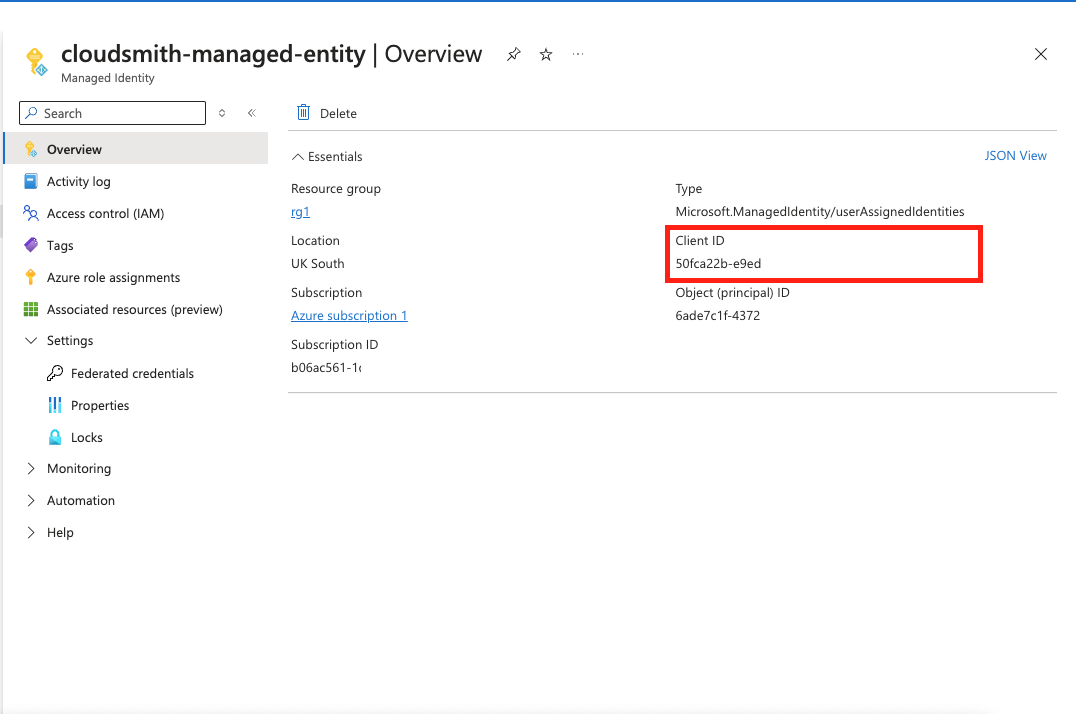

6. We will use an OIDC Token to authenticate against your Azure Managed Entity. For this, you will need to create a Federated Credential in the Managed Entity you previously created.

-

Go to Managed Identities > Federated Credentials, and create a new one.

-

We will send you all the details you need to fill in.

Existing Setup

Note: We will use your existing Azure Tenant for new log typesIf you already have an export set up with us, we will use the same account that you have initially provided us with. If you wish to use a new account for a new type, please go through the New Setup and provide relevant information.

If you already have Blob Container exports setup with us, we will use the same Azure account that you have provided us with. The only change that may be required is a change of folders inside your Cloudsmith log container to separate the different log types.

For example if your current solution has no folders inside of the Cloudsmith export container and all exports go to /<export>, we recommend creating folders:

- client-logs

- audit-logs

e.g. Client logs will export to<container>/<client-logs>/<client-log-export>

Please let us know if you plan to separate the logs into different folders in order for us to reflect the changes in both the new export requested and the old one.

Summary of Information Required

You should have provided us with the following information:

- Azure Tenant ID

- Azure Managed Entity Client ID

- Azure Storage Account Name

- Azure Blob Storage Container Name (e.g.

cloudsmith-acmecorp-logs). - Azure Blob Storage Folder Name (e.g.

cloudsmith-logs), if any. - The type of logs you would like to export (e.g. Client and/or Audit logs).

- The format of the logs you'd like to export (e.g. Apache-style, CSV, JSON Stream, JSON Stream + Timestamp, etc.)

Which format should I choose?It depends! Each format is "streaming," meaning there's one line per entry for each download rather than a large object. In terms of detail, the Apache (or Nginx) format has the least to conform with those formats, CSV has the next level of detail but is limited due to columns, and JSON has the most level of detail as a structured format.

We recommend choosing JSON Stream + Timestamp, in which a JSON blob representing the download is prefixed with a timestamp, making this nice to parse and import into your favorite Business Intelligence (BI) or Security Information Event Monitoring (SIEM) tooling.

How often are logs exported?Log exports currently run on an hourly interval. However, we’re actively working on supporting shorter intervals in the future - stay tuned for updates!

We should have provided you with the following information to configure a Federated Credential:

- Token Issuer URL

- Identity ID

- Token Audience

What can I include/exclude?It is possible to include or exclude the following:

- EULA acceptance data.

- location-based (Geo) data.

- IP data.

- namespace identifiers/paths.

- repository identifiers/paths.

Data Provided

Client Logs

Some of the data provided includes:

- Edge Location: Nearest CDN Edge Node Location (e.g., "LHR61-C2")

- EULA ID: Unique identifier for EULA accepted (if any)

- EULA Number: Revision of EULA accepted (if any)

- IP Address: IP of the client

- Host: Hostname for the request (e.g. your download domain)

- Method: HTTP Method (e.g. "GET")

- Geo/IP Fields: E.g., "City," "Continent", e.g. enriched based on IP (we pay for this)

- Package Identifier: Unique identifier for downloaded package (if any)

- Package Name: Name for the downloaded package (if any)

- Package Version: Version for downloaded package (if any)

- Protocol: HTTP Protocol Used (e.g. "https/1.3")

- Referer: HTTP Referrer

- Request ID: Globally unique identifier for the request

- Status: HTTP Status Code (e.g. "200")

- Time Taken: Time taken to service request, in seconds

- Token ID: Unique identifier for entitlement token used (if any)

- Token Name: Name for entitlement token used (if any)

- URI: Uniform Resource Identifier, i.e. path, for the request

- User Agent: HTTP User Agent sent by the client

- User ID: Unique identifier for the client user (if any)

- User Name: Name for the client user (if any)

Audit Logs

- Actor: The user or system that performed the action.

- Actor IP Address: The IP address of the actor.

- Actor Kind: The type of actor, such as "user" or "system".

- Actor Location: The location of the actor, as determined by their IP address.

- Actor Slug Perm: A unique identifier for the actor, used for permissions.

- Actor URL: The URL of the actor's profile.

- Context: The context in which the event occurred, such as "web" or "api".

- Event: The type of event that occurred.

- Event At: The date and time the event occurred.

- Object: The object that was affected by the event.

- Object Kind: The type of object, such as "user" or "file".

- Object Slug Perm: A unique identifier for the object, used for permissions.

- Target: The target of the event, such as the user who was affected.

- Target Kind: The type of target, such as "user" or "file".

- Target Slug Perm: A unique identifier for the target, used for permissions.

- UUID: A unique identifier for the event.

Format Examples

JSON (Stream) [recommended]

One JSON record per line, without a header line:

{"bytes": 2150, "datetime": "2024-05-30T15:14:27+00:00", "edge": "us-east-1", "eula": null, "format": "Terraform", "host": "dl.cloudsmith.io", "ip_address": "3.234.139.83", "location": {"city": "Ashburn", "continent": "North America", "country": "United States", "country_code": "US", "latitude": "39.046900", "longitude": "-77.490300"}, "method": "GET", "namespace": "cloudsmith", "package": {"identifier": "wKSkBFHpsNWB", "name": "cloudsmith-terraform-example", "tags": {"info": ["local"]}, "version": "1.0.1717082040651684"}, "recorded": "2024-05-30T15:42:56+00:00", "referer": "", "repository": "testing-private", "request_id": "8v19hTgCw3rVWw9gl1QiPuSGf3JOpKMktSNSk0vn-rTSqcmCx7bYqw==", "status": 200, "token": null, "uri": "/terraform/terraform-local-cloudsmith-terraform-example-1.0.1717082040651684.tar.gz", "user": {"identifier": "svHuImXP38Ev", "username": "end-to-end-tests"}, "user_agent": {"browser": "Go-http-client 2.0", "device": "Other", "os": "Other", "raw": "Go-http-client/2.0"}JSON (Stream+Timestamp)

One JSON record per line, prefixed by a timestamp, separated by a single whitespace character*, without a header line:

2024-05-30T15:14:27+00:00 {"bytes": 2150, "datetime": "2024-05-30T15:14:27+00:00", "edge": "us-east-1", "eula": null, "format": "Terraform", "host": "dl.cloudsmith.io", "ip_address": "3.234.139.83", "location": {"city": "Ashburn", "continent": "North America", "country": "United States", "country_code": "US", "latitude": "39.046900", "longitude": "-77.490300"}, "method": "GET", "namespace": "cloudsmith", "package": {"identifier": "wKSkBFHpsNWB", "name": "cloudsmith-terraform-example", "tags": {"info": ["local"]}, "version": "1.0.1717082040651684"}, "recorded": "2024-05-30T15:42:56+00:00", "referer": "", "repository": "testing-private", "request_id": "8v19hTgCw3rVWw9gl1QiPuSGf3JOpKMktSNSk0vn-rTSqcmCx7bYqw==", "status": 200, "token": null, "uri": "/terraform/terraform-local-cloudsmith-terraform-example-1.0.1717082040651684.tar.gz", "user": {"identifier": "svHuImXP38Ev", "username": "end-to-end-tests"}, "user_agent": {"browser": "Go-http-client 2.0", "device": "Other", "os": "Other", "raw": "Go-http-client/2.0"}

Future CompatibilityTo ensure compatibility, please parse the "whitespace character" as a space OR tab (for future compatibility).

CSV

Starts with a header line, followed by one CSV record per line:

datetime,repository,status,method,uri,host,ip_address,bytes,city,country,edge,eula,format,package,recorded,referer,request_id,token,user,user_agent

2021-04-01T19:40:55+00:00,cloudsmith/testing-private,200,GET,/rpm/fedora/29/x86_64/cloudsmith-redhat-example-1.0.16173057145-1.x86_64.rpm,dl.cloudsmith.io,82.1.4.8,10421,Newmarket,GB,LHR61-C2,,RedHat,vmN1OlMDi3Iy,2021-04-01T19:46:23.817658+00:00,,dZ3DmJ8yHU36FyH9uHoyWH4LocmlkzdKXSEuOBmnvLhA4ZF7exa5Qw==,wKSkBFHpsNWB,eP1B3YCtTlJX,libdnfApache Style

3.222.115.18 - t-6GXPs3OxkOio [30/May/2024:15:15:16 +0000] "GET /deb/ubuntu/dists/bionic/main/source/by-hash/SHA256/c6f31d4574a468d87347ea68b1420d9d5cbc7f1704df40b4ec45c3a7b1cb11e4 " 200 2247 - "Debian APT-HTTP/1.3 (1.6.17)" eula:noneWHAT’S NEXT

Tell your users what they should do after they've finished this page

Updated 5 months ago